Volkswagen’s own Life Cycle Assessment claims the Golf Diesel is about as “sustainable” as their fancy ID.3. Airbus (finally) admits that their first hydrogen airplanes won’t be there by 2035, as they demand all-new storage and logistics. Just this month, a senior official of an aircraft maker again disqualified electric flying as being constrained, for years to come, to 50 passengers for 200 miles or a trade-off between the two, but nothing for mass air transport. And Europe refocuses on a war and threats from the East and an erratic president in the West, shifting away from climate action or sustainability. Some more detailed thoughts and a reality check on climate-friendly mobility as Food for Thought.

Yeah, it’s been a while. I had a lot on my plate, moving into a nice old town house, smartifying it, modernizing. At the same time looking for and managing jobs that keep me afloat, managing Airportinfo and still seeking potential investors for Kolibri.

And in recent weeks I had some more learning curves about claims, with the harsh reality check hitting my own life with brute force.

While Sustainable Mobility is of importance to me, there are two major areas that impact my life rather directly: car mobility and flying. Though keep in mind that “sustainability” is about 17 SDGs, not just climate. But let’s focus on the famous “climate side” of it today.

So let me share two findings on each today. And yes, I do appreciate if you disagree, comment or call me to discuss. But so far, I get a lot of support from other “activists” focusing on sustainable mobility.

The Electric Cars Lie

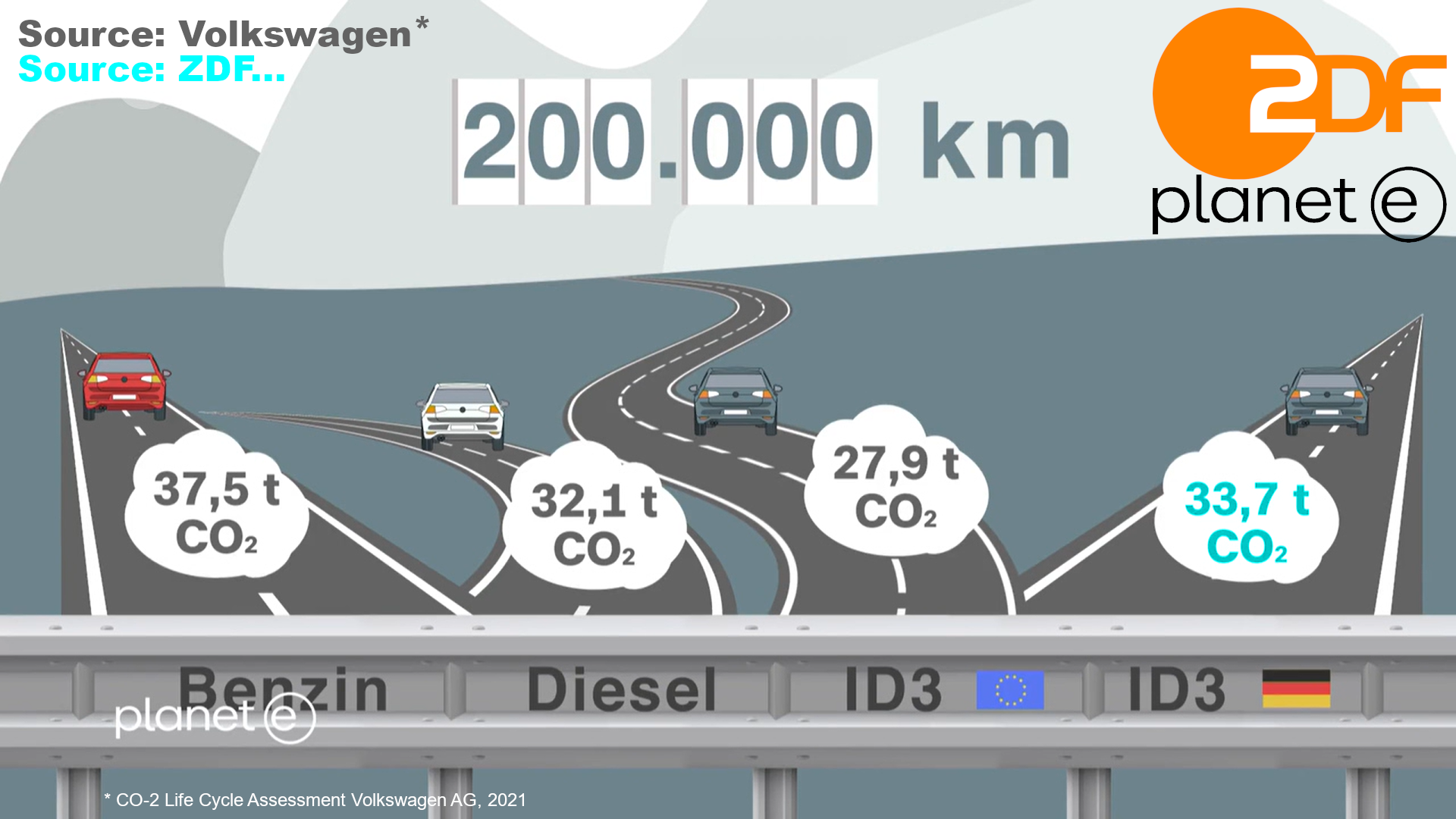

While developing Kolibri, we looked into (ground-based) e-Mobility for our company’s ground mobility. You may remember Volkswagen’s own life-cycle assessment I shared before on their ID.3 vs. the Volkswagen Golf:

When The Numbers Don’t Compute: Braunschweig

A Real-World Example…

Now it happens that I live with my family in a satellite suburb of Braunschweig (English: Brunswick), location of Germany’s Research Airport. Close to Volkswagen’s hometown of Wolfsburg, Braunschweig is also a very Volkswagen-dependent city. And yes, I drive a car with a combustion engine. Say what?

But yes, investing now into solar power for my own use, I just checked again whether I have the possibility to turn to an electric car. Have one of those fancy Wall Boxes…? But. Naaaaw. What’re you thinking?!

In this suburb I live together with some 24 000 others, and the entire area does not have “own parking”. Almost all (98%) of the buildings have no car parking of their own; the cars are parked (like in many cities) “on the street”. Between the house/garden and the parking area on the street is the walkway, which you may not “block” by running a cable from your house to the car to load it. Technically it’s neither easily possible, nor is it allowed at all, to add a parking space in the garden. Or the front yard. Naaaaw. What’re you thinking?!

City Claims Fact Check: Climate Neutral by 2030

So I reached out to the city’s “Sustainability Pros”. A city with a population of some 250 000 (so almost 10% in our satellite suburb). I asked what their plans are for their claim to become climate neutral by 2030, and how they support me, aside from no more investment help for roof photovoltaic (PV). But. Naaaaw. What’re you thinking?! Noooo, there are no plans on how I could ever use my own PV to load my car. But hey, they are going to invest into a “massive” 500 loading stations across Braunschweig by 2028, right. No. To load … what?

Official statistics: per 1 000 people, there are 980 registered cars in Germany. So we talk about some 245 000 cars in Braunschweig. We talk about 80-90% not having their own loading infrastructure (even if they want to). So in the best case (I guess it’s worse), 200 000 cars. We talk about what? 500 loading stations in Braunschweig? Roughly 400 cars sharing each loading station? You got to be kiddin’, right?
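For those who like to check the math, here is a minimal sketch of that back-of-the-envelope calculation. It only uses the figures quoted in this post (250 000 residents, 980 cars per 1 000 people, 500 planned stations); the 80% share is my assumption, taken from the lower end of the 80-90% estimate, and the function name is purely illustrative.

```python
def cars_per_station(population, cars_per_1000, share_without_own_charging, stations):
    """Return (total cars, cars depending on public charging, cars per station)."""
    total_cars = population * cars_per_1000 / 1000
    dependent_cars = total_cars * share_without_own_charging
    return total_cars, dependent_cars, dependent_cars / stations


# Figures as quoted in the post; 0.8 is an assumption (lower end of 80-90%).
total, dependent, per_station = cars_per_station(250_000, 980, 0.8, 500)
print(f"{total:,.0f} cars in the city, {dependent:,.0f} without own charging, "
      f"{per_station:,.0f} cars per public station")
```

With the 90% share instead, the result only gets worse, so “roughly 400 cars per station” is, if anything, on the friendly side.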

Aside from the fact that the power cost is at minimum double what I’d pay if I could use my own PV power through a wallbox. And see below on the issue of the loading cycle.

And don’t believe this is an isolated case, it’s the same all over Europe. Guess why cars with combustion engines still outnumber electric ones. Only 2.1% of the cars registered in Germany are electric. And recently the number of combustion-engine registrations is on the rise again.

Climate Neutral by 2030? Maybe 2050? Naaaaw. What’re you thinking?!

Reality Check: Forget about it.

Loading Cycle

Loading takes from 30 minutes with fast charging (high strain on the battery, shortened life expectancy) to about four hours on average (German Automobile Club ADAC). This is frequently a problem during summer vacation: long lines of EVs (electric vehicles) waiting for their turn at the charging station, then sitting at the truck stop for three, four hours before they can continue. Good business for the truck stop.

Now residents want to park their car and charge overnight. Not leave the parking spot after four hours (in the middle of the night) to allow the neighbor to load.
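To put the loading cycle into perspective, here is a rough sketch. It assumes every station is occupied around the clock with zero changeover time (a very optimistic assumption of mine), and uses the four-hour average quoted above together with the 500 stations and roughly 200 000 cars from the Braunschweig example. The function name is hypothetical.

```python
def days_between_charges(cars, stations, hours_per_charge, hours_per_day=24):
    """Days a car waits for its next slot if all stations run non-stop."""
    charges_per_station_per_day = hours_per_day / hours_per_charge
    charges_per_day = stations * charges_per_station_per_day
    return cars / charges_per_day


# Four hours per charge, as quoted above; non-stop use is an assumption.
wait = days_between_charges(cars=200_000, stations=500, hours_per_charge=4)
print(f"One charging slot per car about every {wait:.0f} days")
```

If a car stays plugged in overnight, say 12 hours per slot, the same function gives one slot per car every 200 days.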

Reality Check: Forget about it.

Car Life-Cycle vs. e-Mobility

Today, an average car is used for 10-20 years in Europe, then exported to other countries where those “old cars” are still in great demand. So a car on average has a life cycle of 20-30 years. But Germany, as an industrialized country with supposedly “better” infrastructure than most, still sells only a small fraction (2-3%) of its cars with an electric motor; the other 97-98% are combustion powered. End of the Combustion Engine by 2035? Without serious, practicable plans for loading infrastructure capable of meeting the mass demand? #greenwashing

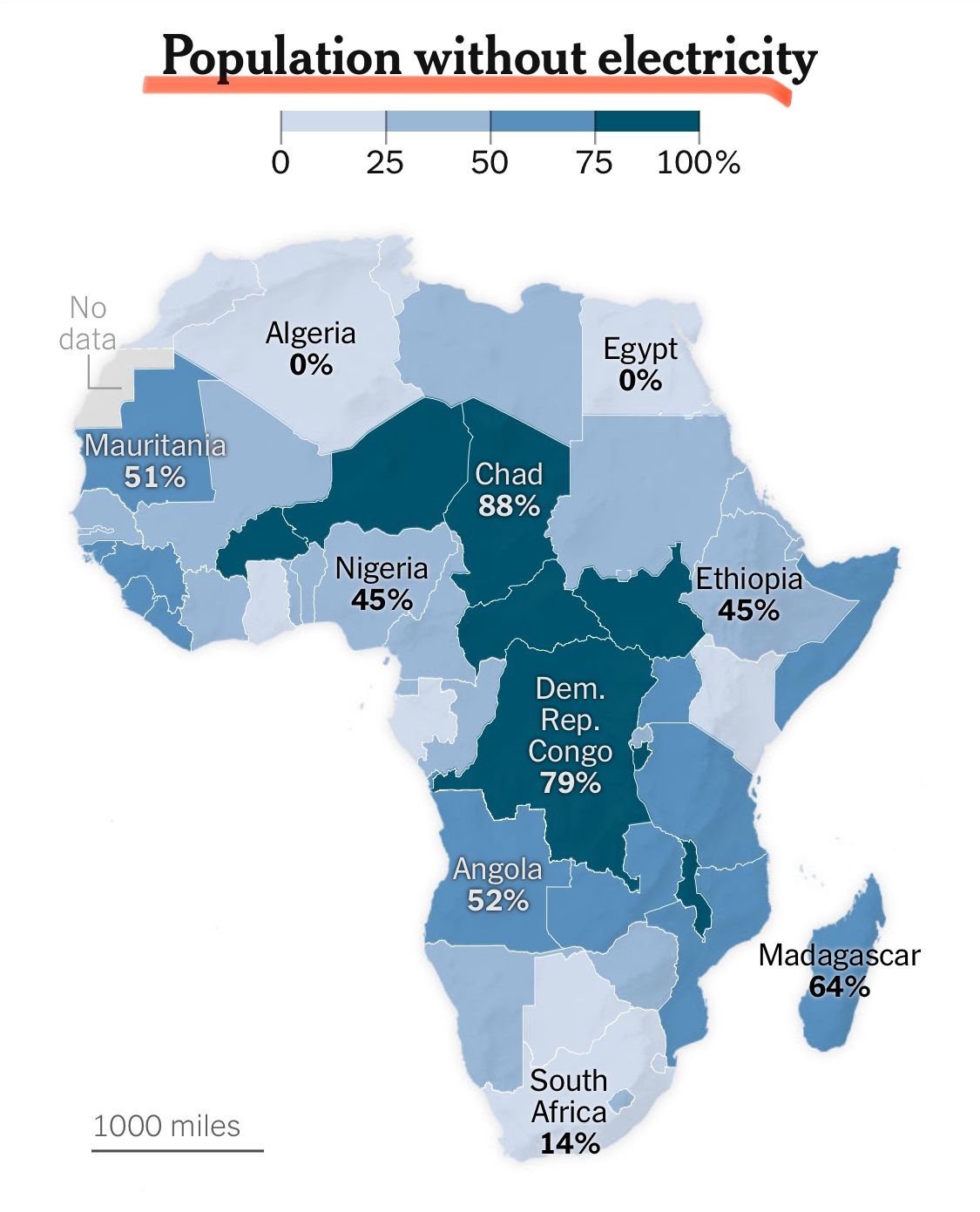

And in the “less sophisticated countries”, how do you get the electricity “powered up” to supply the loading? That ain’t just about Africa, that’s true for rural areas even within the mighty EU! The image was published by the New York Times just recently.

Reality Check: Forget about it.

Side Issue Braunschweig: The Green District Heating Lie

This is also quite in line with another self-deception here: “District Heating is green”. Just that in Braunschweig it’s (well hidden) 71.4% fossil (coal, “natural” gas, oil), the remaining 28.6% wood waste… Green like in #greenwashing! Yes, they have big plans. Especially to extend their reach. And to use “natural gas” and extend the use of wood, resulting in more trucking. Or use surplus heat from steel mills in the region. Who cares where that energy comes from…

Reality Check: Forget about it.

My other big topic on sustainable mobility is

Climate Friendly Flying

And inside Climate Friendly Flying, there was some rather frustrating news, mostly ignored by general media. Talking to them, I got the response that it is not noteworthy, as (aside from me) who would believe in climate-friendly flying happening in the next 25 years anyway… Yes. That hurt. But it’s true and in line with my own experience. So let’s quickly look at that.

Electric Flying

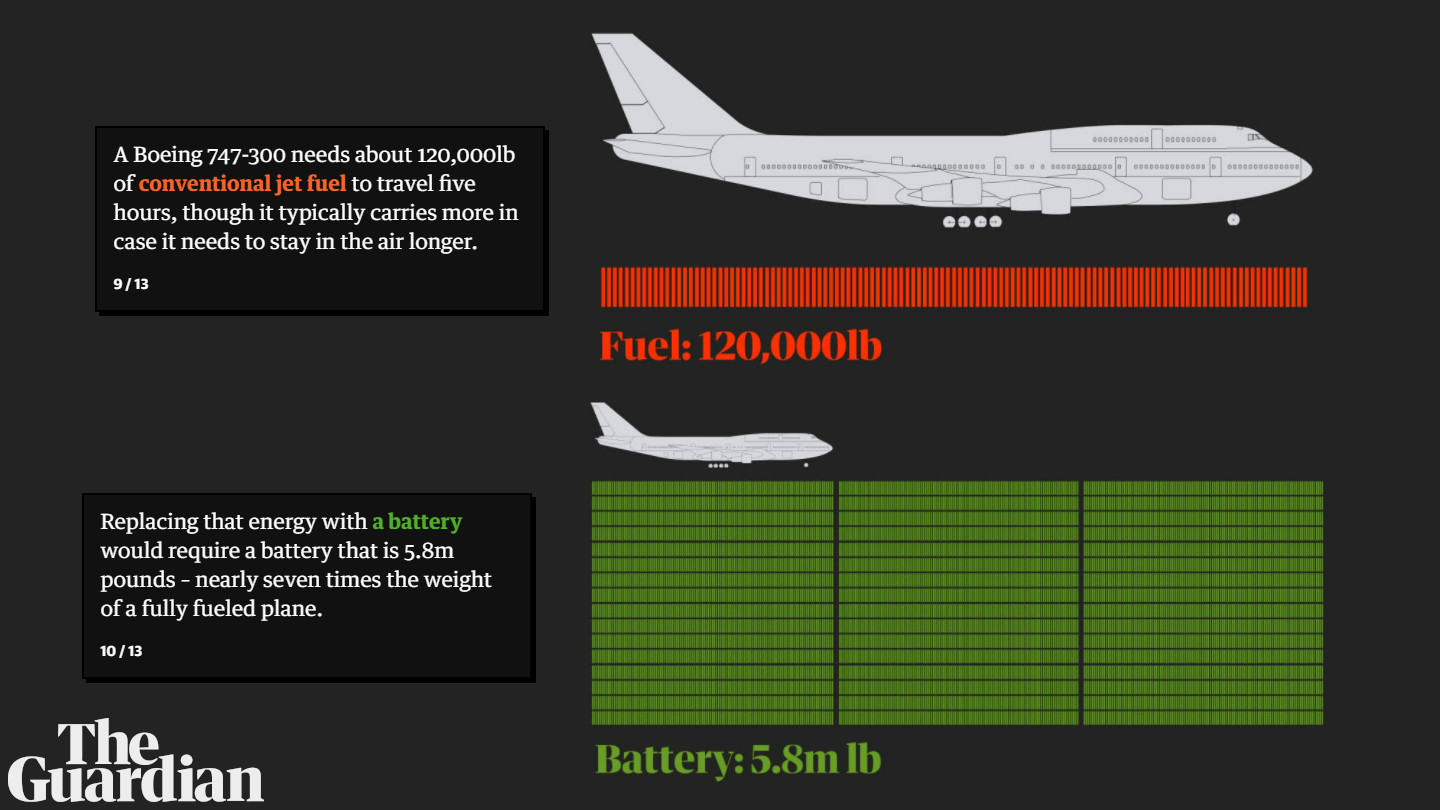

The issue with electric flight remains very much as it was back in 2019, when Boeing pulled out of their venture with Zunum.aero. There was a statement this month by an aircraft maker’s senior manager (yes, I know who), relating to the 2019 statement by then Boeing CEO Dennis Muilenburg that they would at best make it to fly 50 passengers and no freight for 200 miles (300 kilometers). Possibly able to trade capacity for range. Nevertheless, nowhere near a “mass market”.

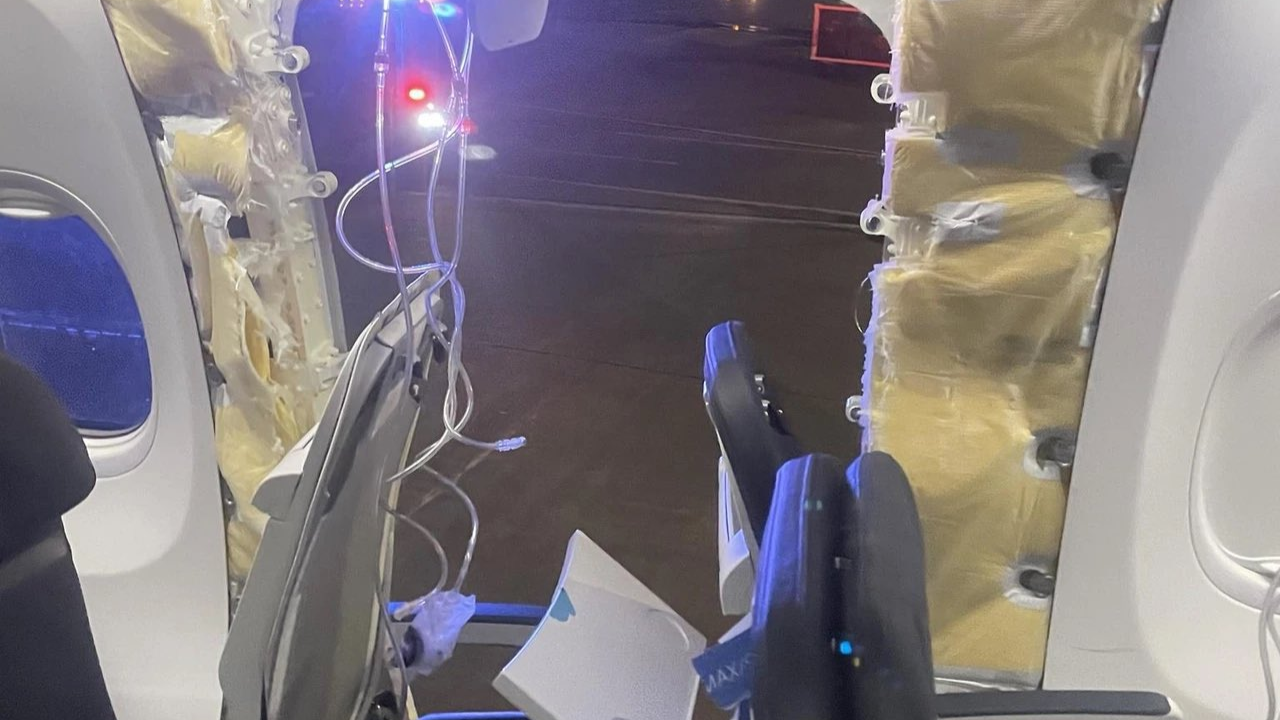

Even given the development of new batteries with higher energy density, that senior official and other experts in a recent academic video conference call on battery development warned quite emphatically about the increasing risks of high-altitude flying and pressure changes straining those batteries. Their assumption was that flight-certified batteries would not benefit from those density improvements in the near (or not so near) future. They used the example of the Samsung Galaxy Note 7, where the first events also unfolded airborne, due to the additional strain of changing altitude (air pressure) rather quickly. As well as the Boeing 787 door batteries that caught fire, attributed to “new batteries with higher energy density”. He questioned the ability to improve “quickly” on range/load, as higher-density batteries will pose additional risk to aircraft safety and as such would demand a lot of additional testing for flight certification.

Reality Check: Forget about it. 50 passengers. 200 miles. Max.

Air Taxi

I do hope you know my Whitepaper on Air Taxi. Three reasons why Air Taxi IMHO is a ruse. First, vertical take-off and landing (VTOL) is the most energy-inefficient mode of air transport. Aside from that, there are helicopters covering that niche. Second, individual transport is the most energy-inefficient mode of transport. Third, air traffic control is already often on the brink of collapse; now add thousands of air taxis transporting potential travelers in and out of the restricted airport air space. Or colliding midair over a populated area like Manhattan?

The recent insolvencies of Lilium and Volocopter haven’t come as a surprise to anyone who has followed their expensive developments.

Reality Check: Forget about it.

“Funny” (telling): After their friends at Earlybird Capital just lost quite some money on Air Taxi, World Fund now celebrates their “green” investment into electric flight. Sorry that I am not elated. You know my (justified) take on Electric Flying and Air Taxi.

Hydrogen Powered Flights

As I wrote some years ago in my whitepaper about the Road to Environmentally-Friendly Flying, there are quite a few setbacks for hydrogen flying. Namely NOx, a problem known in the academic research groups, but far bigger and quite logical, it’s an issue of (green) source, refining, logistics, storage and then use. As someone in a video conference on the topic asked: talking about liquid, ultra-cold hydrogen, what happens if the tank leaks (very hard landing, crash)? Shock-frosted passengers?

Ever since 2020, many in academic research have questioned the ambitious timelines used in aviation, given that a first prototype is most likely not available before 2035, with first aircraft in the best case making it to a fleet (in small numbers) by 2040, more likely 2045. Given the challenges in storage and logistics, hydrogen will in the best case not become “mainstream” before 2080, more likely in the next century!

Airbus just a month ago finally admitted to those facts by confirming a “delay” in hydrogen development, as reported by Reuters.

Reality Check: Forget about it.

Energiewende (Energy Transition)

A Limited View

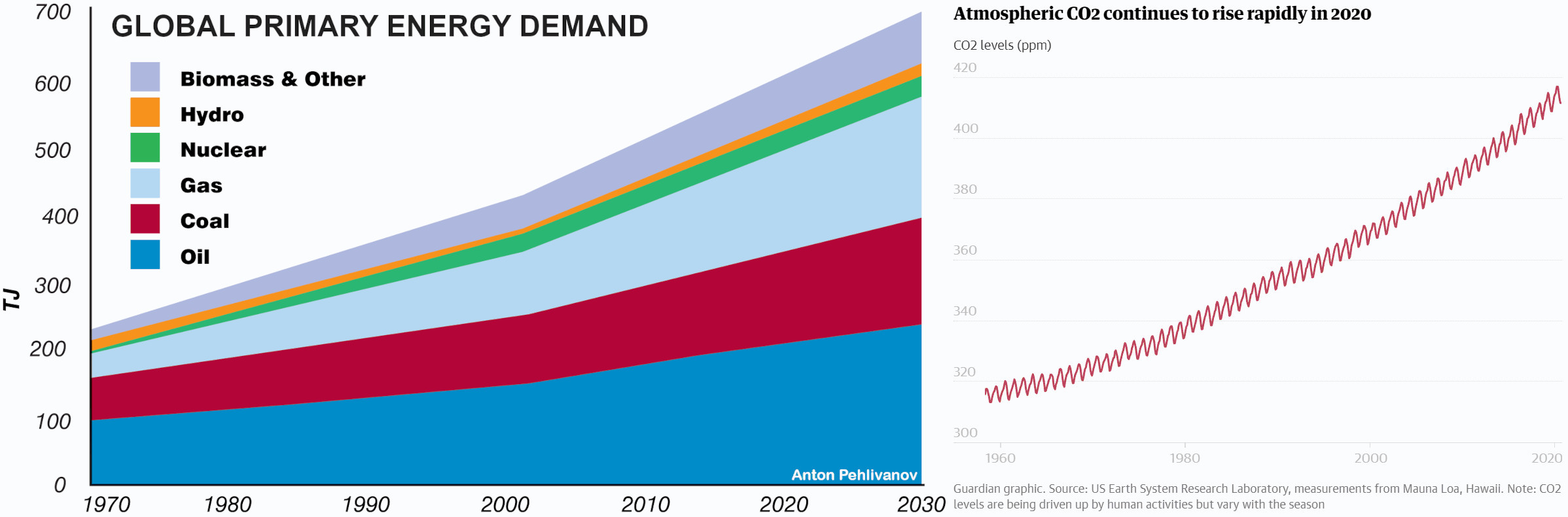

As I mentioned in the Sustainability Energy Dilemma, the lobbyists intentionally work with incomplete energy demands, intentionally ignoring that in the end it all boils down to energy. Be it electric power, electric cars, but also trains, electric flying, … or the refining of fuel replacements, be they hydrogen or synfuels:

If you move a body from A to B, it requires one thing and one thing only: Energy. Basic Physics!

So for example: the German Research Institute for the Energy Industry (FfE) is a lobby body “supporting” the German government on the Energiewende. They work out the food the politicians then work with, to plan the energy transition towards green energies. But in their studies they intentionally ignored, and still ignore, those needs. “The need for green fuels for Europe because of aviation such exceeds the defined [fuel] amounts defined […] those values were of secondary relevance, as we assumed an import of these fuels”. You. Got. To. Be. Kidding. Right?

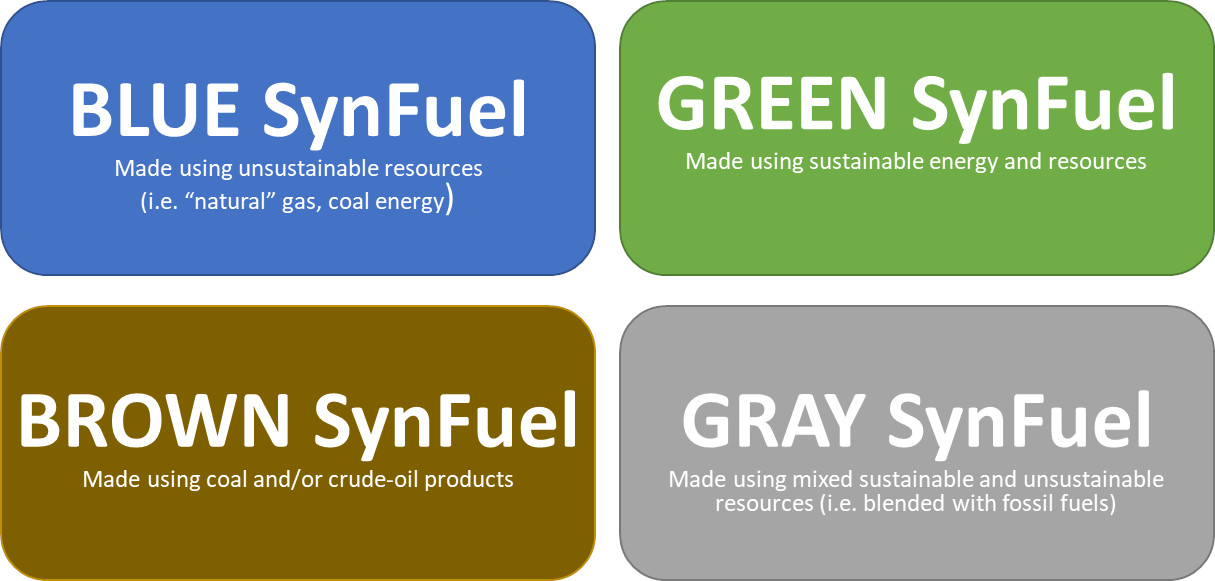

What truly upsets me is the likes of World Fund or European Investment Bank disqualifying Kolibri for our strategy based on green SynFuels, as their “study” disqualifies it. A study full of false lobby arguments, starting already with the naming, using “electrofuels”. We use “synthetic”. No-one in his/her right mind would call hydrogen “electro”, just because you need energy to refine it! But their researchers call it “electrofuels”? And even their study says that if you have a business case, their negative assumptions might require reconsideration…

Reality Check: Forget about it.

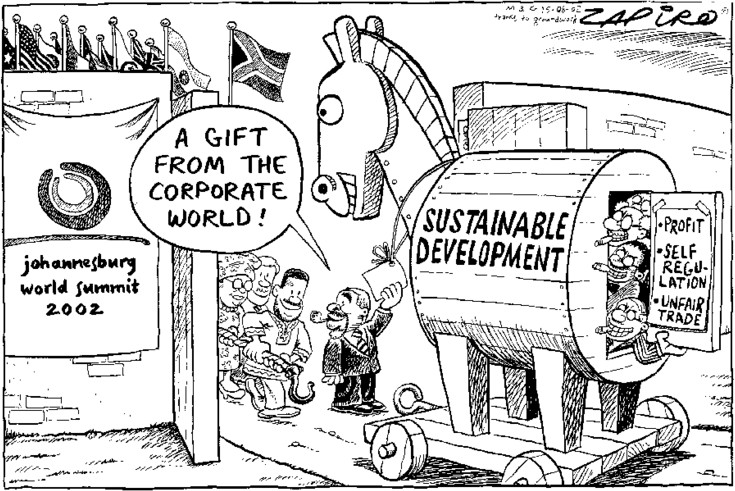

Impact Investor = Greenwashing?

One impact investor I “unlinked” last year, after they argued that Kolibri wouldn’t work, as otherwise other investors would have already funded it… Look in the mirror, my (ex’d-)friend: why didn’t you bother to take a serious look at our numbers? Then that investor argued about me questioning greenwashing on some cool stuff they’ve invested in. Though hey, those investments went into fashionable but unsustainable projects, with a negative impact on the people working in that industry and on the projected energy demands. The focus was on maximizing the “green show-off” and the financial profit expectation. The very same investor told me that they expect above-market returns on their investment. Yeah, sorry. But at the cost of their sustainability claims.

Reality Check: Forget about it.

Green vs. Grid Energy

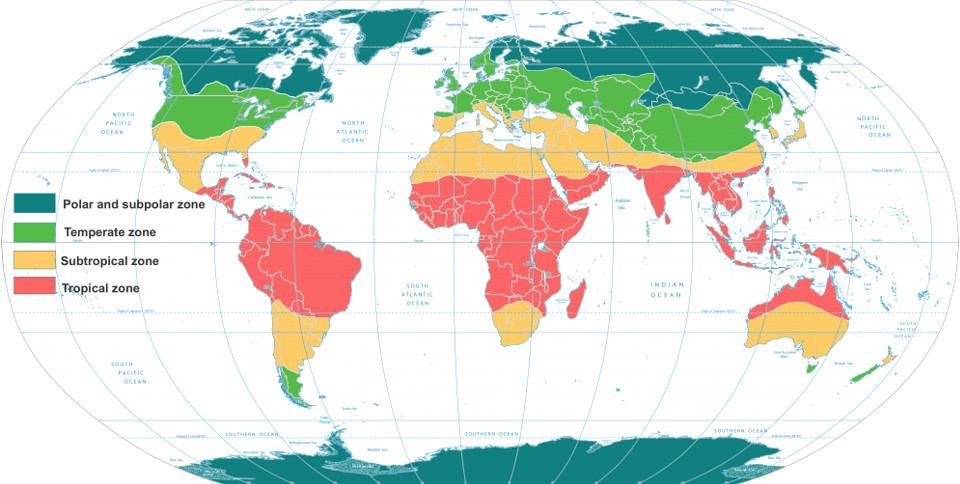

Did you know why we want to start Kolibri in Southern Europe? I just plan to put solar panels on our family-owned house in Northern Germany. But the angle of sunlight is in stark contrast to Southern Europe, whereas the cost for electric power is multiple times higher. But the lobbyists use that energy cost to disqualify SynFuels as “too expensive”. And they consider buying the power needed to generate it … from the grid.

Just like those impact and green-tech investments’ blind eye, claiming they buy and use “green energy” … from the grid. Germany last year increased its generation of green energy, though at the same time increasing overall consumption, as well as its import of nuclear and fossil energy (31.9 TWh) [Source: German]. And there are unconfirmed but recurring and, for me, reasonable accusations that the energy companies for all practical purposes sell more green energy than is being produced.

Reality Check: Forget about it.

The CPP-Distraction

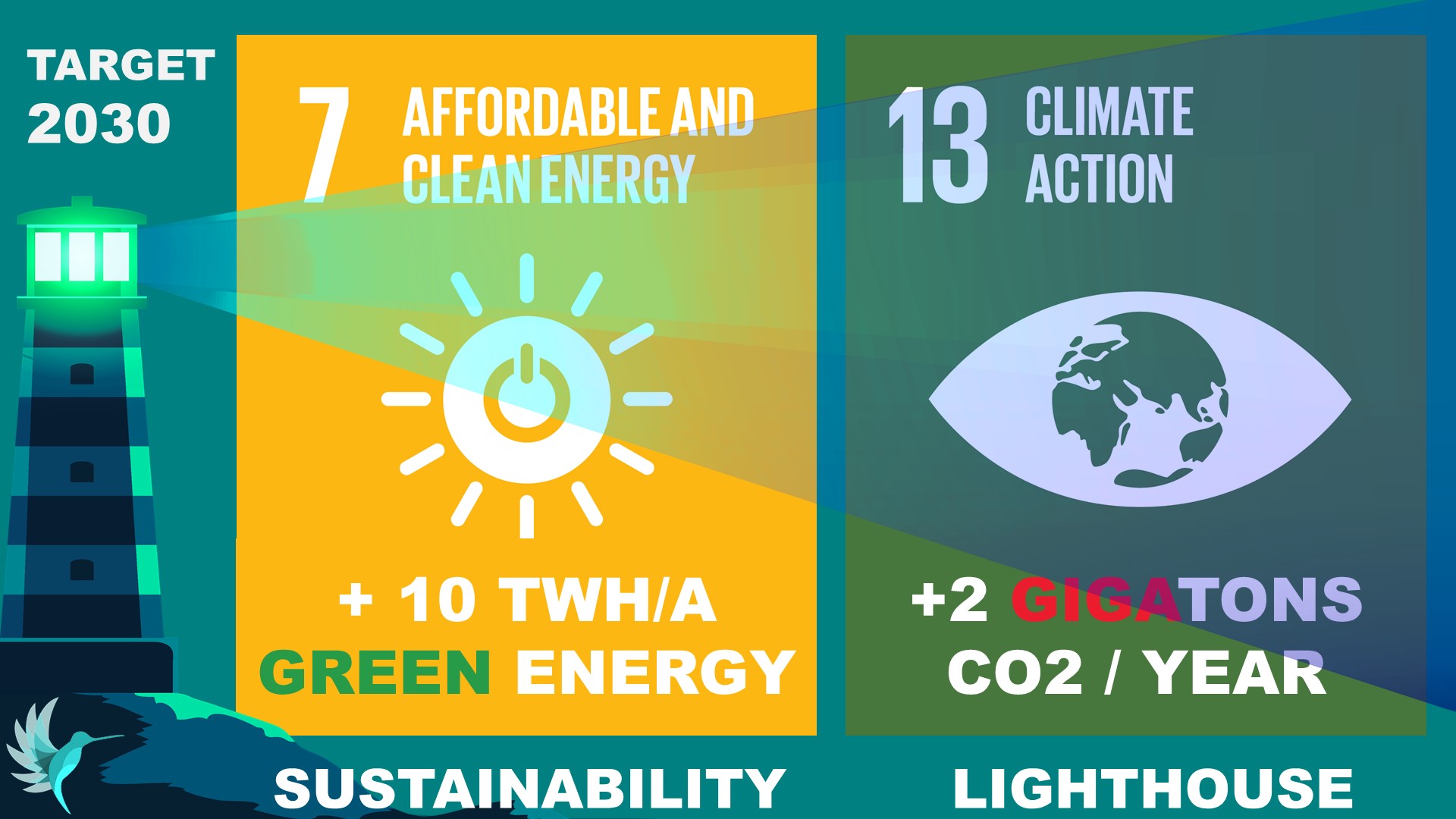

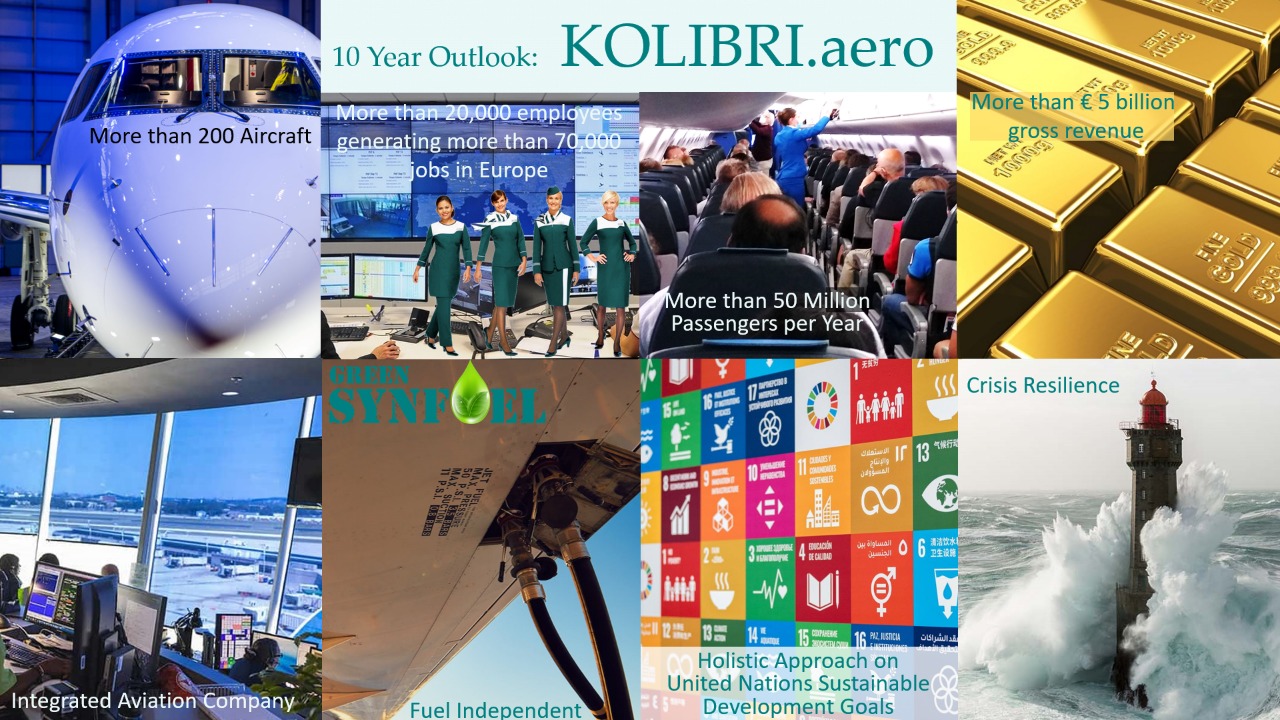

Though just like others, they keep refusing to take any closer look at our hard numbers. Numbers qualifying profitability of the venture within three years, and 100% fossil-free flying within about seven to eight years, still in the 2035 range! And while they fancy themselves for focusing on a 200 MT CPP (megaton carbon-capture potential), we talk about one gigaton fossil-fuel replacement, so a 1 000 MT CPP. Not by 2035, but in 2035. Doubling within the following three years on our 10-year plans.

Yes, that depends very much on convincing more impact investors and the political stakeholders that we can do it, and on their support for the onward funding. For that, there are a lot of political investment projects seeking such developments, but requiring an already existing company. Like others, “they don’t invest in ideas”. But they do invest in “Climate AI” and greentech, exponentially driving energy demands up.

Reality Check: Forget about it.

Sustainable, Climate-Friendly, Carbon-Zero vs. Fossil-Free

Some “correction” in wording – guilty as charged myself.

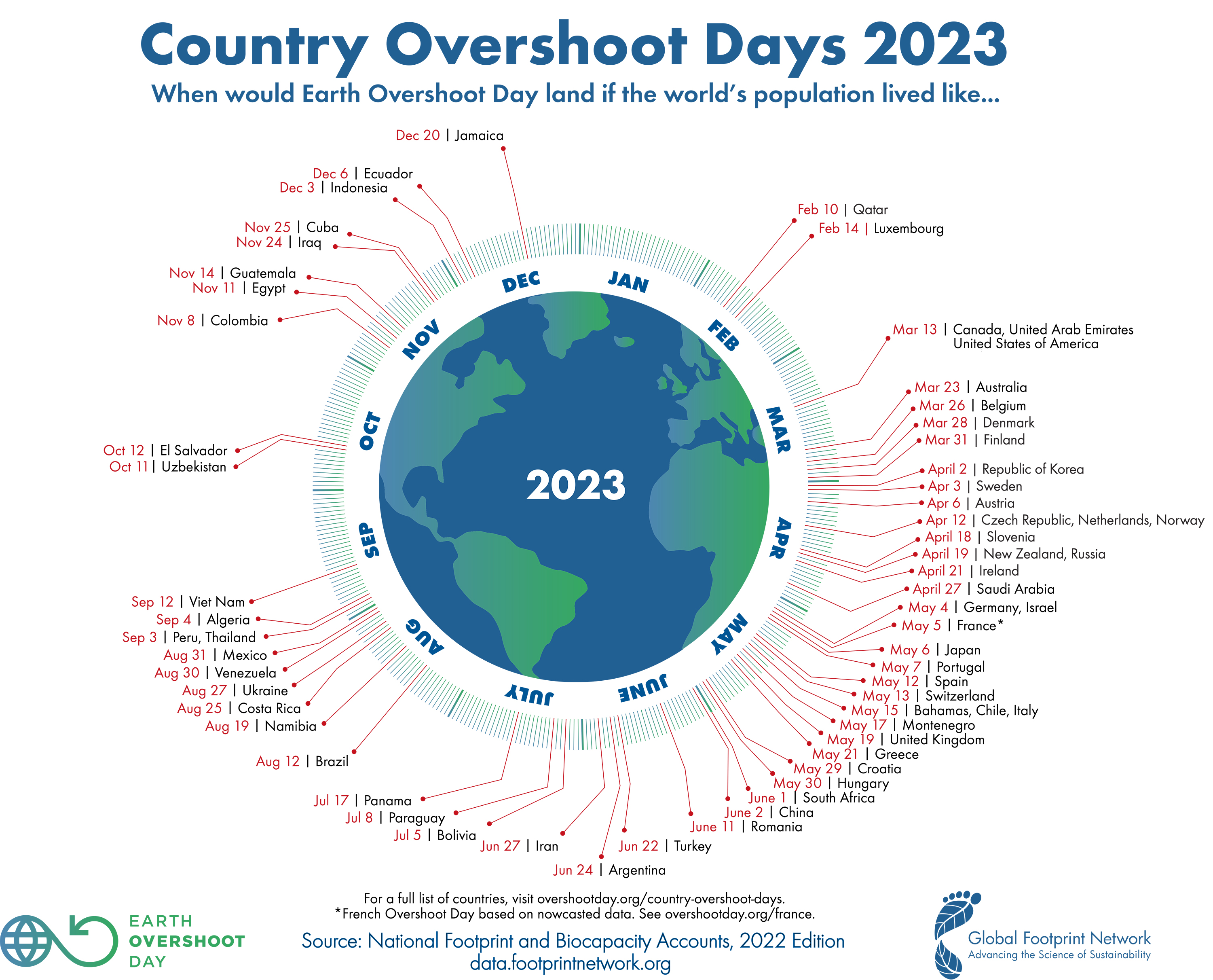

- Sustainable: All 17 United Nations Sustainable Development Goals. Not just Climate. Or People. Or Water. Or Air. All. Else it’s #cherrypickingsdgs

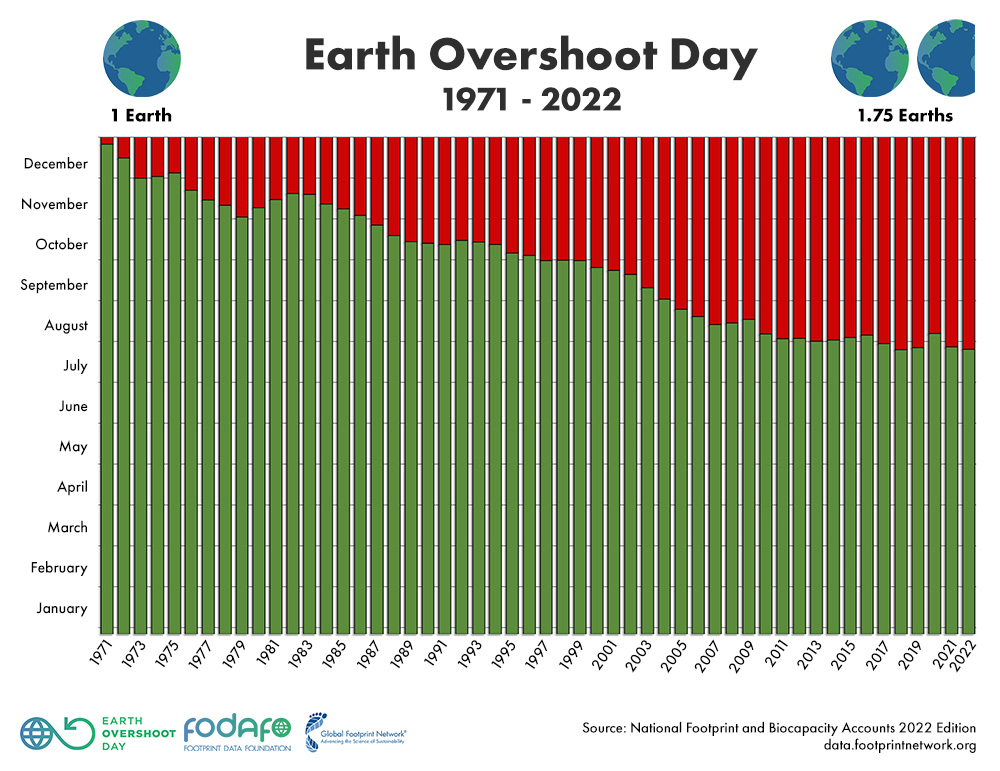

- Climate-Friendly: Focused on SDG #13 “Climate Action”. We busted the Paris goal of 1.5°C global warming last year. And it goes very clearly in line with the growing energy consumption. So as I keep telling, SDG #7 (Energy) goes together with SDG #13. But it is rather normally used for #cherrypickingsdgs

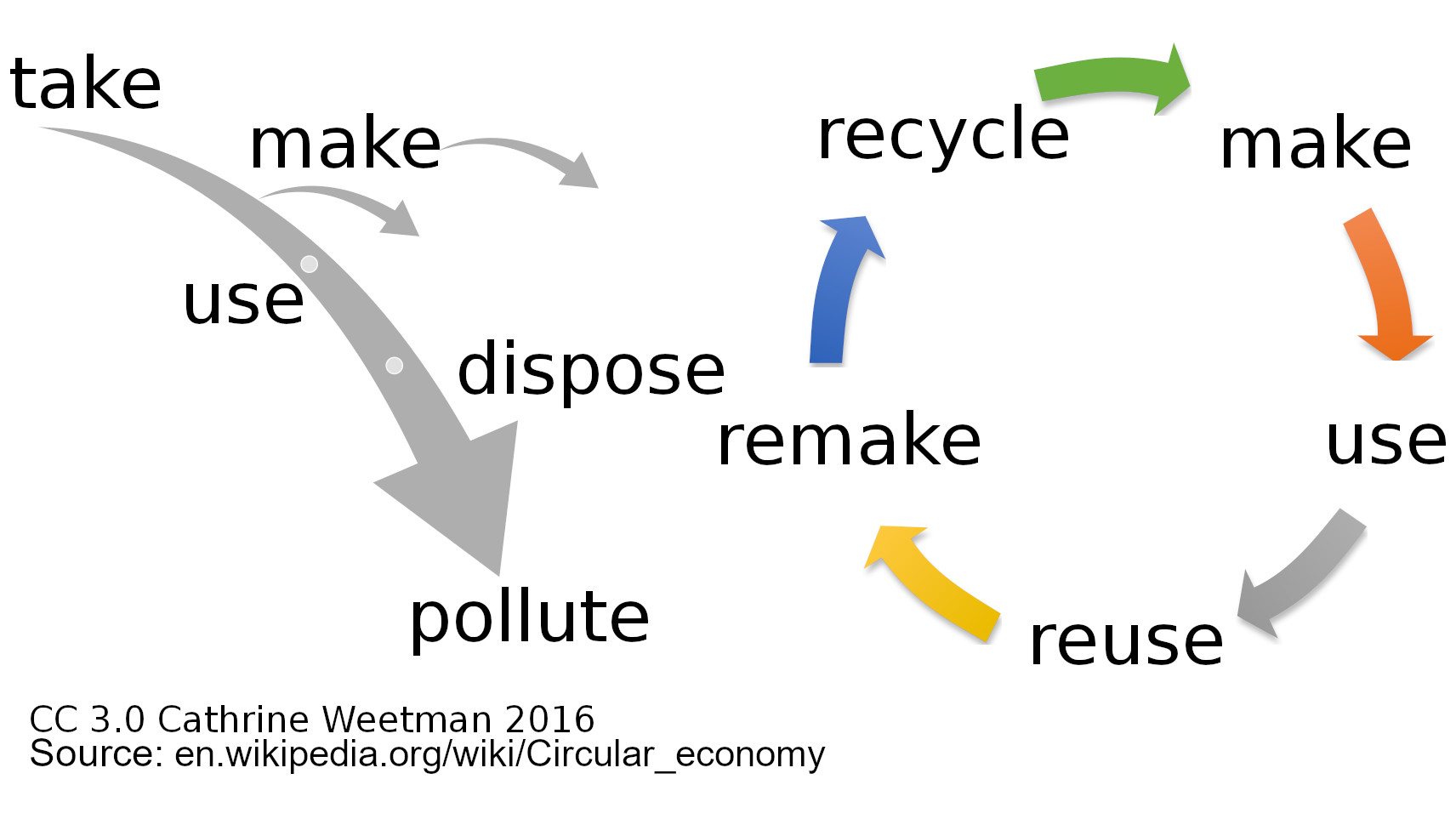

- Carbon-Zero, just like “circular economy”, is usually a diversion, a ruse. Carbon-Zero means we use no carbon. Doesn’t work. We must use Climate-Neutral in the sense that we don’t add more carbon (and what about the other greenhouse gases) to the atmosphere than we take out of it. In Circular Economy, it should mean that in the end, we return to nature everything we took from it. All resources and energy. That would mean (but rarely is interpreted as such) that in a circular process, we take out food (being energy for us) and products from the cycle, but in the end must return them to where they have been taken from.

- Fossil-Free in my humble opinion is what we must focus on as a key priority! If we fly and drive fossil-free, that means electric cars are not driving on fossil-generated power any more. Airplanes don’t fly on fossil-based kerosene, nor fossil-based “sustainable aviation fuels”.

Why I Keep Fighting to Fund Kolibri

Yes, it does require bold ideas, bold strategy and bold investment to make those ideas happen. Overcoming the Sustainability Energy Dilemma. Flying 100 jet aircraft across Europe 100% fossil-free in seven years, 200 within 10 years. No cherry-picking of the SDGs but holistically sustainable. Like. No-one is left behind!

Hm. Yes, we have such ideas and a strategy that is built to secure profitability first to justify the further investment. So after one year of investment to set up the company and start operations, we would be able to pay back the investment with an ROI after another two years. Though a real impact investor wouldn’t just want to launch and cash in. A real impact investor (listening to us) would understand and believe in the lighthouse impact we’d have even across other industries. A real impact investor would want to join us for the journey.

It’s the real thing. It requires an industry scale investment to start an airline with competitive cost per seat. No cheap-crap small, fancy-looky-looky investing. But you get what you pay for. Including the ROI. Though yes, I also had a nice discussion this week on a comment of mine asking: Define “Return”. Or “Shareholder Value”. As they are usually rather different and far less one-dimensional than usually implied. Mine always has been and is: Do the right thing.

Food for Thought

Comments welcome:

![“For those who agree or disagree, it is the exchange of ideas that broadens all of our knowledge” [Richard Eastman]](https://foodforthought.barthel.eu/wp-content/uploads/2016/08/eastman_quote.jpg)

Again, I assume it’s something you heard from me before. For many years now, I restricted myself to 10 (ten) mailing lists. The RSS-feeds mostly dried up anyway. But I found it interesting how many mailing lists I got “signed up” to. No, I didn’t do that myself. I simply got added. Got to be kidding, I thought, when my (intentionally) unfiltered inbox (previously filtered) for those six weeks flooded my new mail app with 13 000 e-Mails. Excuse me? Over about 45 days, that’s more than 280 mails a day?

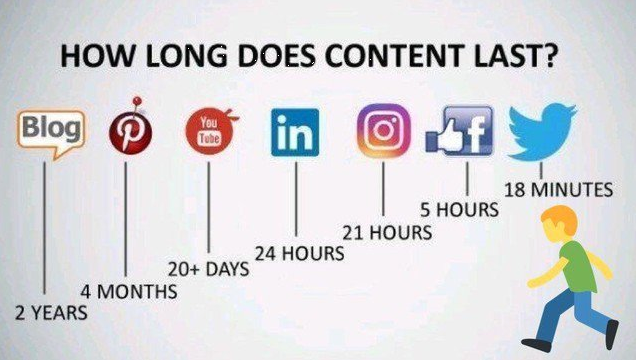

I had already limited my activity on LinkedIn to two hours a day. Before. Now in those weeks, I made those two hours about every three to four days. And found I may have missed out on thousands of “news” in my feed. But I started reaching out one-on-one, which turned out rather more productive. Including feedback that those people from my network have not seen much of my posts in the past months. So what was that back in 2020 about the half-life of social media information?

So while no longer prioritizing LinkedIn or the blog, I will keep writing the blog, for the mentioned reason. To summarize and organize my own thoughts. I also plan to experiment with a VLOG. But that’s not on my priority list either. So far I use my little studio for web-calls (WhatsApp, Google Meet, Zoom, etc.). Let’s see how that will go.

This is another example how the

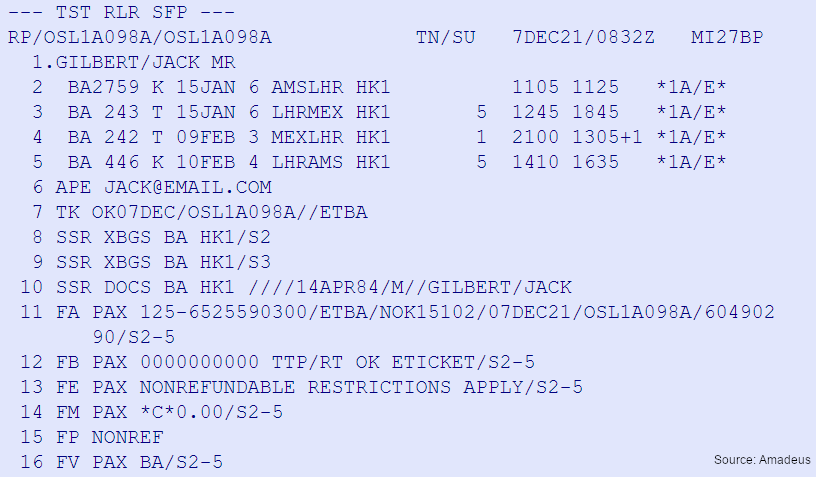

While this is so long ago, many in our industry have forgotten that SABRE was the first computerized global network that allowed us long before the World Wide Web to go into a travel agency somewhere and book flights, later hotels and other travel services on the other side of the world. When I entered the industry back in 1987 at

!["Do something about it when something "smells funny". Even if it's not on your job description, IT'S YOUR JOB." [Henna Inam]](https://foodforthought.barthel.eu/wp-content/uploads/2019/02/Inam-Henna-Its-Your-Job.jpg)

Together with a colleague I became the responsible point of contact for airlines, managing, explaining and mitigating the “booking discrepancies” in a pre-online world, when bookings were transferred by teletype (a telex-like, but automated system), not in real time. Only inside Amadeus, real time was “normal”. After some years, the internal network of which I wasn’t part established a “Product Management Flight”, taking over my colleague’s and my responsibilities… By that time I had become a member of the local Airline Sales Representatives Association (ASRA), though that suddenly was considered as overstepping my responsibilities. Something I found and find a statement of total bureaucratic thinking. Many years later, that was why Henna Inam’s statement resonated so well with me.

On research for the ASRA on my second “Airline Sales & e-Commerce” presentation, a series covering GDS, Online Services like AOL or CompuServe, but also already the new “World Wide Web” (WWW), that ran annually for some 15 years, I stumbled on the WWW, which I then still accessed via a then-new link by CompuServe, across a single form field on a website that called itself the “Internet Travel Network”. Those were really pioneering days, the Internet being something for student freaks… The form took a Sabre command and returned the result, usually a flight availability. Or for the smarter of us also an air fares analysis result.

In 1996, some four or six weeks before a milestone that changed our industry, we did by mistake do test bookings in the real-world system and booked up about a hundred Lufthansa flights with travelers called Test Tester… While that was far enough in the future and we could resolve the issue with Lufthansa, we were told by Amadeus that it was not acceptable to abuse their system like this and they would never, never ever approve of someone doing bookings on Amadeus through a web-page!! No f***ing way! Oh yes, we were in big trouble.

A friend I came to trust just recently called me a “visionary”, something I never call myself. When I learned the bells and whistles of “Economics” (Wholesale & Foreign Sales), my instructor on business education was the boss of a large wholesale logistics center. He taught me to always think things through. What will be the repercussions of buying from the cheapest? Your product will lose in quality. But, he instilled that in me: There is always someone cheaper out there. And he also emphasized and taught me to leave the comfort zone of “we have always done it that way”. We must think outside the box and constantly strive to be better.

OAG summarized on the

This is one reason, I do not believe we can make

To date, I am still working with consulting companies reviewing airline business plans. Aside from the usual failure issues, size is a recurring issue. Another is the lack of fallback in case of flight disruptions, be they caused by technical issues, weather or other events. Their focus on cheap “human resources” and missing team building results in friction and internal competition that further weakens their product offering.

!["Our Obsession with technology will slow down the green transition.” [Lubomila Jordanova]](https://foodforthought.barthel.eu/wp-content/uploads/2021/11/Jordanova-Lubomila-Techfocus-slows-down-green-transition.jpg)

Having been reminded again of

In 2008, I developed another “disruptive” idea of a hydrogen-powered WIG, to promote the need to think sustainably at a global aviation conference. While it made it through a viability study into serious negotiations with a tropical government and a major green fund, it fell victim to Lehman, but I still think it should have been developed. Though since

Another issue that keeps coming up in my discussions is that we must stop competing on sustainable solutions. This is a major challenge, and not just for one industry or one generation. It’s a global one. So let’s stop competing and start joining forces! Back to my example of offshore wind farms and tidal energy turbines. Why not use them side by side in the same sea region we impact anyway by building those humongous wind farm structures? Why not take old oil rigs, clean them, fit them with tidal energy turbines and turn them into artificial island structures for sea life (and aerial life, too)?

While we now suffer from decades of the management misconception that everything must be subordinate to (quick) financial profit and that profits justify the means, we are starting to recognize that “sustainability” must be a “shareholder value”, just as much as “long-term success” (viability). My friends and audience know that I have questioned the purely financially focused “shareholder value” for at least the past 25 years. And as an economist by “original” profession, I question all those dreamworld models that burn money on the next big hype.

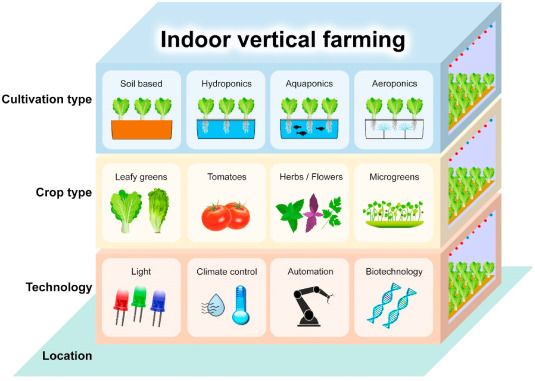

Given the current droughts and considering circular economy, thinking about greenhouses filling entire regions in Spain, I think we will need to invest in vertical farming: a “closed system” that improves water usage and reduces the use of chemical fertilizers, herbicides and pesticides. Discussing with a startup recently, I was surprised by the effort they put into the seed sequence. Not for the plant or the soil, but to make sure the bees they use find sufficient nectar at all times.

There are many investments into small-scale change, with a focus on two to three years. That by itself should be a concern for any real impact investor. For climate change and sustainability, that can only be a start. What is the 10-year outlook? What impact will it make by 2050?

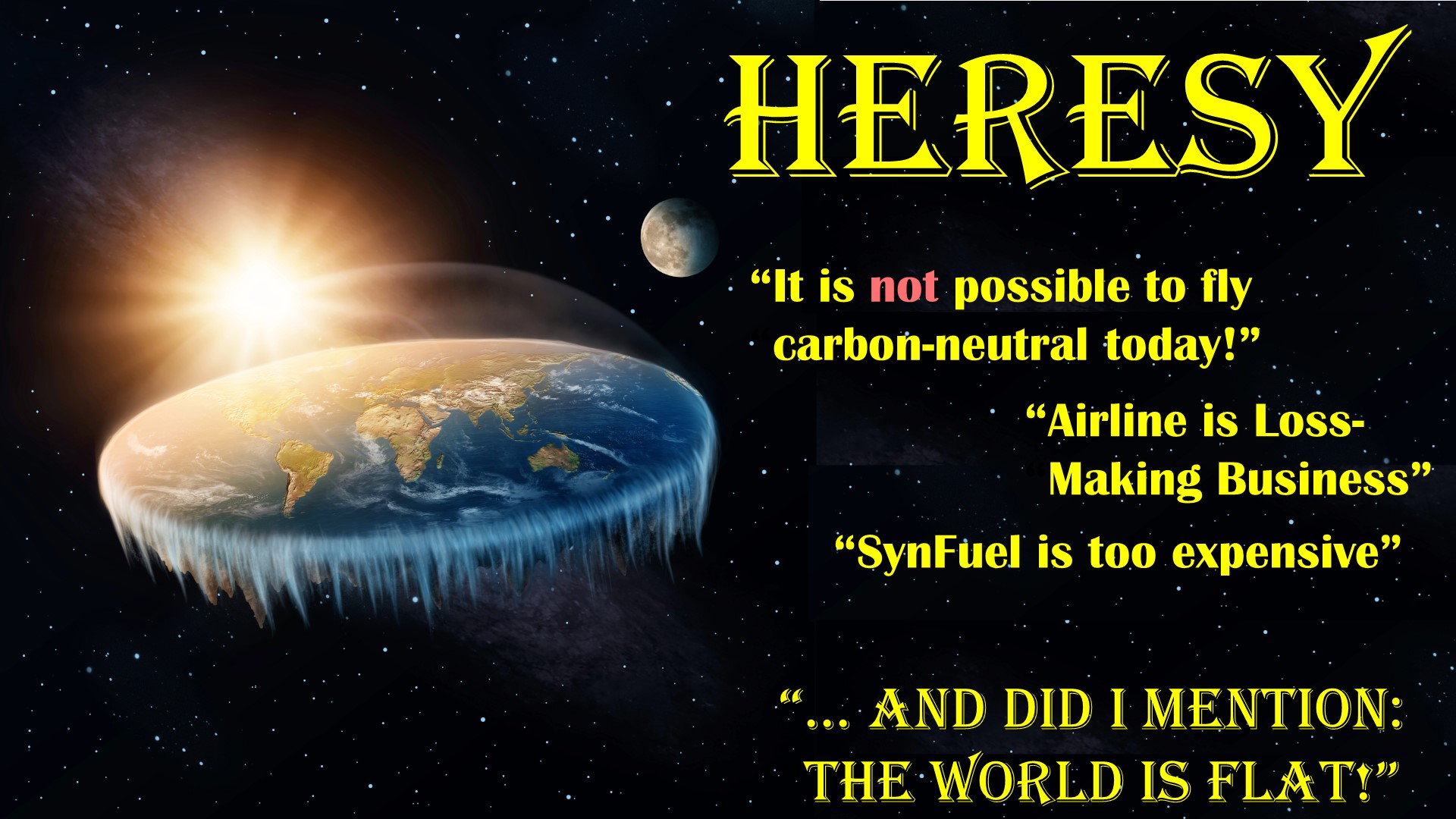

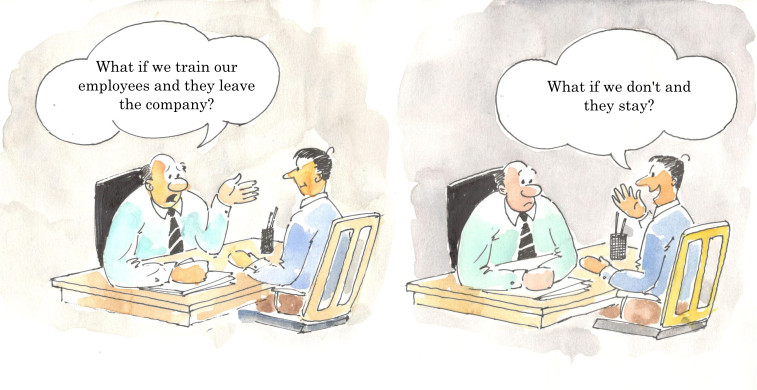

One of the main concerns we are faced with at Kolibri is our approach to sustainability. First of all, why would an airline turn sustainable? It’s heresy, ain’t it? And why would we pay salaries above the country average? Maybe because they are sustainable and secure people’s motivation and loyalty?

Is your job “system relevant”? If you work from a home office, I can tell you the answer is No. If you work in consulting, I can tell you the answer is very likely No. If you work in aviation and transport, the answer is very likely No. And if your salary is above average, the answer is also very likely No.

My “intern” boss (again) taught me respect for everyone. The guy on the forklift, the cleaners, truck drivers and “secretaries” (yeah, we still had those). He taught us to set up the coffee when it was empty and not bother the secretaries. To clean up after ourselves to make the cleaners’ jobs easier. To think beyond our petty box as “office workers” and value the hard work of the real workers. Also to question, but then also embrace the value of our work. IF we added value.