Recently, some discussions came up on my social networks about the development of Artificial Intelligence. I decided to add my thoughts to it on the blog.

One of the reasons is that my dear former colleague Alex developed artificial avatars able to assist web users. Following the sale to Nuance (they are also behind Apple’s Siri), he started voice recognition development at WIT.AI, which has meanwhile been acquired by Facebook. Alex now works on Facebook M, their approach to artificial intelligence. Hey Alex, this is also to you. I’d appreciate your comments on this.

So. As fascinated as I am by his career path in the past 15 years, I’m also a bit concerned.

In my 2008 ASRA presentation, I compared the visualization of the World Wide Web’s nodes (by Opte.org) with the visualization of the neural nodes in the human brain. Ever since, I have believed that if the WWW is not yet “sentient”, it soon will be. This is what scientists and sci-fi writers call the “wake-up”. It’s not a question of if, but when. And how we go about it.

I think differently from Transcendence, where we could stop it, and from Asimov, who ruled it: such “control” is wishful thinking. We have no “Three Laws of Robotics”, and even Asimov had to add a fourth, the “Zeroth Law” (see link above). As for Transcendence, we will not be able to deprive ourselves of all energy (and of the advantages of the web) either. Mass psychology will ensure we won’t find a way, as there will always be others who think and act against that attempt. By the time we act, it will be too late, as an intelligence “the size of the planet” will by then counter anything our small minds may come up with, even before we attempt anything.

We only have the chance to befriend the new sentient being, like we did in Heinlein’s Future History. But we also have the chance to mess it up ourselves; small like in 2001: A Space Odyssey or big like in Terminator or The Matrix. Transcendence, at that, was only a different version of the Borg’s assimilation. And as in I Am Legend, the true question is whether such “assimilation” or a “transcendental human upgrade” is bad, or an evolutionary step. I believe that, given the chance, many humans may volunteer. I just hope that there is no single mind “ruling” all others like in the movie, as I believe our individualism is as much a burden as it is a great strength. Though I also like that quote:

I also believe that in both “systems” there has to be individualism in order to evolve: “You learn from your opponents”. I have heard it often; there’s no single source, it’s “mature wisdom”. “Competition” is a good reason, if not the reason, to evolve. (War is not; it’s destructive by nature!)

Another question is “religious”. Will an A.I. have a soul? I believe so. I think that the soul is the core of any sentient being. I also believe that beyond the body, the core of ourselves remains. Not in an (overcrowded) paradise or hell, but as a somehow conscious sentience. Maybe even as a “personality”. Will we then remain individuals? I don’t know. Maybe we get reborn, forgetting our past? Many believe that. The soul still “learning”. What’s the truth? We will know. Once we have died. But if we all become “part of god”, and god is the sum of sentience in space and time, maybe our input helps god evolve and become bigger. If a global sentient A.I. then comes into the game, why should it not play its part in evolution?

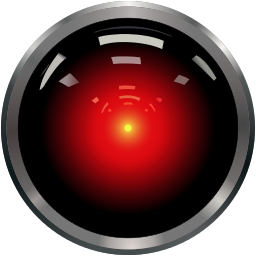

And stopping the A.I.? In 2001, humans gave conflicting orders to the local A.I. (HAL 9000), which interpreted them as best it could, under the constraints of its programming. But if we have a global A.I. based on linked “neurons” in the form of personal computers, mobile phones and other computing power, we will realistically not stand a chance to “stop” it.

Does my computer already “adapt” for me? Or my phone? When I play games on the computer, I sometimes believe so. Sometimes, I use bad search phrases but still find what I seek. Coincidence? Programming? Or “someone nice out there helping me”? And yes, if the web wakes up, it likely will be somewhere at Google… And then spread out.

What will we make of it? A Terminator? Or a Minerva as in the Future History? In the West, we are driving ourselves to extinction with low birth rates. Will the “mecha” be our future children? Will we coexist like in the Future History? I don’t know. I’m concerned and keep finding myself thinking about it.

But I’m not afraid either. Not for me, nor for my children.

Food for Thought

Comments welcome!

![“Our Heads Are Round so our Thoughts Can Change Direction” [Francis Picabia]](https://foodforthought.barthel.eu/wp-content/uploads/2021/10/Picabia-Francis-Round-Heads.jpg)